题目

2025FallB-X-CSE571-78760 模块 7: 顺序决策 知识检查 Module 7: Sequential Decision-Making Knowledge Check

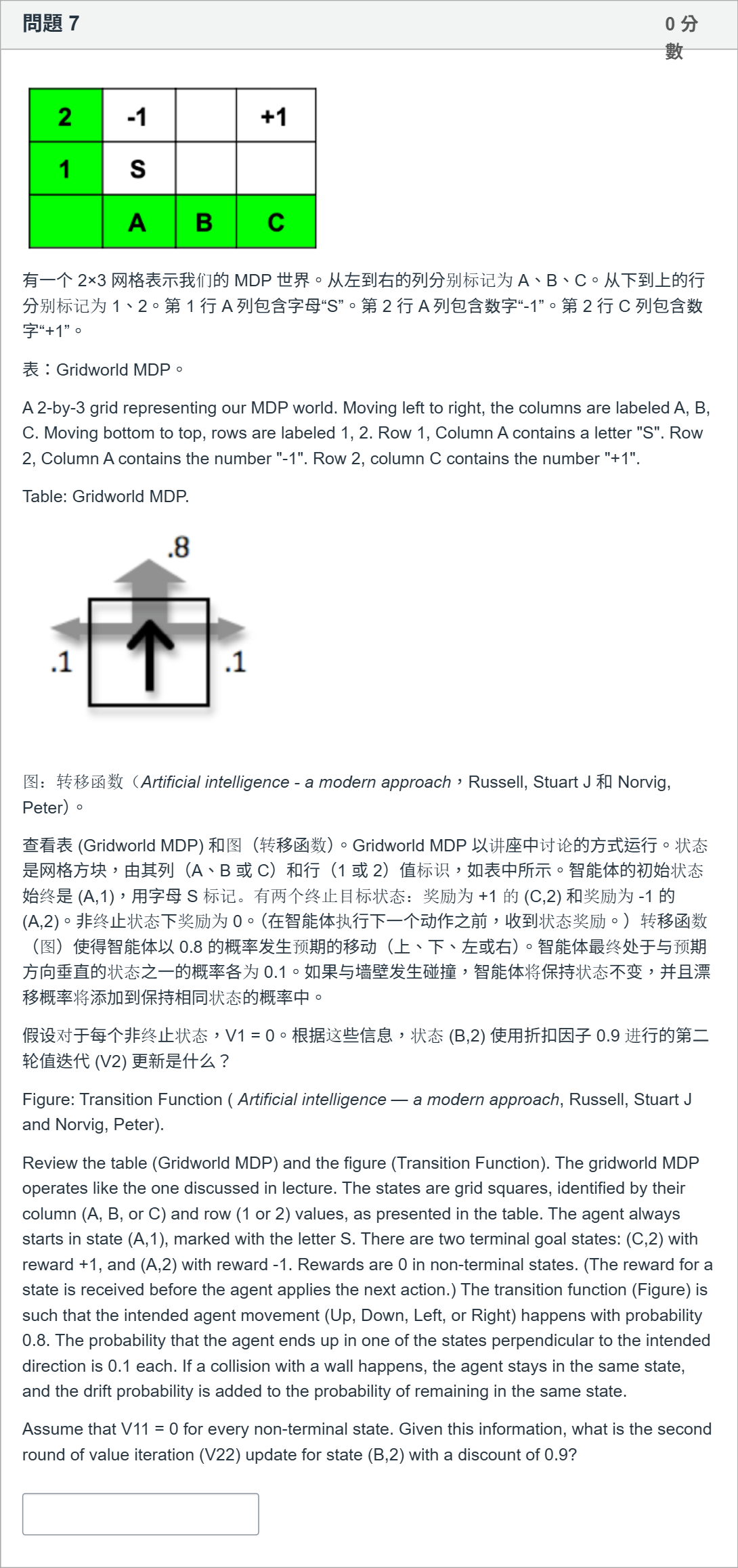

数值题

有一个 2×3 网格表示我们的 MDP 世界。从左到右的列分别标记为 A、B、C。从下到上的行分别标记为 1、2。第 1 行 A 列包含字母“S”。第 2 行 A 列包含数字“-1”。第 2 行 C 列包含数字“+1”。 表:Gridworld MDP。 A 2-by-3 grid representing our MDP world. Moving left to right, the columns are labeled A, B, C. Moving bottom to top, rows are labeled 1, 2. Row 1, Column A contains a letter "S". Row 2, Column A contains the number "-1". Row 2, column C contains the number "+1". Table: Gridworld MDP. 图:转移函数(Artificial intelligence - a modern approach,Russell, Stuart J 和 Norvig, Peter)。 查看表 (Gridworld MDP) 和图(转移函数)。Gridworld MDP 以讲座中讨论的方式运行。状态是网格方块,由其列(A、B 或 C)和行(1 或 2)值标识,如表中所示。智能体的初始状态始终是 (A,1),用字母 S 标记。有两个终止目标状态:奖励为 +1 的 (C,2) 和奖励为 -1 的 (A,2)。非终止状态下奖励为 0。(在智能体执行下一个动作之前,收到状态奖励。)转移函数(图)使得智能体以 0.8 的概率发生预期的移动(上、下、左或右)。智能体最终处于与预期方向垂直的状态之一的概率各为 0.1。如果与墙壁发生碰撞,智能体将保持状态不变,并且漂移概率将添加到保持相同状态的概率中。 假设对于每个非终止状态,V1 = 0。根据这些信息,状态 (B,2) 使用折扣因子 0.9 进行的第二轮值迭代 (V2) 更新是什么? Figure: Transition Function ( Artificial intelligence — a modern approach, Russell, Stuart J and Norvig, Peter). Review the table (Gridworld MDP) and the figure (Transition Function). The gridworld MDP operates like the one discussed in lecture. The states are grid squares, identified by their column (A, B, or C) and row (1 or 2) values, as presented in the table. The agent always starts in state (A,1), marked with the letter S. There are two terminal goal states: (C,2) with reward +1, and (A,2) with reward -1. Rewards are 0 in non-terminal states. (The reward for a state is received before the agent applies the next action.) The transition function (Figure) is such that the intended agent movement (Up, Down, Left, or Right) happens with probability 0.8. The probability that the agent ends up in one of the states perpendicular to the intended direction is 0.1 each. If a collision with a wall happens, the agent stays in the same state, and the drift probability is added to the probability of remaining in the same state. Assume that V11 = 0 for every non-terminal state. Given this information, what is the second round of value iteration (V22) update for state (B,2) with a discount of 0.9?

查看解析

标准答案

Please login to view

思路分析

题目重述与要点

- 给定一个 2×3 的网格世界,状态用列 A,B,C 及行 1,2 表示。起点为 (A,1),终止状态为 (C,2) (+1) 与 (A,2) (-1),非终止状态的奖励为 0。

- 转移函数:想要移动的方向发生概率 0.8,其垂直于该方向的两个方向各自发生概率 0.1;若撞墙则保持在同一状态,保持的概率包括漂移到同一状态的概率。

- 折扣因子 γ = 0.9,且对非终止状态有 V1 = 0。

- 题问:在状态 (B,2) 下,第二轮值迭代 (V22) 的更新值是多少?

- 给定答案为 0.72。下面逐项分析各选项(此题只有一个数值答案,因此将围绕为何得到该值进行完整推导)。

一、对状态 (B,2) 的可能转移与即时奖励的确定

- 从状态 (B,2) 若采取“向上”作为意图方向,墙壁在上方,因此会发生撞墙,智能体保持在 (B,2);该分支的即时奖励来自到达的下一个状态的奖励。在这里,若仍在非终止状态,则即时奖励为 0。

- 若采取“向左”作为意图方向,则移动到 (A,2),这是一个终止状态,即时奖励为 -1。

- 若采取“向右”作为意图方向,则移动到 (C......Login to view full explanation登录即可查看完整答案

我们收录了全球超50000道考试原题与详细解析,现在登录,立即获得答案。

类似问题

表: Gridworld MDP 2 5 1 S -5 A B C 图:转换函数 0.8 ^ 0.1 <- | -> 0.1 查看表 (Gridworld MDP) 和图(转移函数)。Gridworld MDP 以讲座中讨论的方式运行。状态是网格方块,由其行(A、B 或 C)和列(1 或 2)值标识,如表中所示。智能体的初始状态始终是 (A,1),用字母 S 标记。有两个终止目标状态:奖励为 -5 的 (B,1) 和奖励为 +5 的 (B,2)。非终止状态下奖励为 0。(在智能体执行下一个动作之前,收到状态奖励。)转移函数(参见图)使得智能体以 0.8 的概率发生预期的移动(上、下、左或右)。智能体最终处于与预期方向垂直的状态之一的概率各为 0.1。如果与墙壁发生碰撞,智能体将保持状态不变,并且漂移概率将添加到保持相同状态的概率中。 假设 V 1 1(A,1) = 0, V 1 1C,1) = 0, V 1 1(C,2) = 4, V 1 1(A,2) = 4, V 1 1(B,1) = -5,V 1 1(B,2) = +5 根据这些信息,状态 (A,1) 使用折扣因子 0.5 进行的第二轮值迭代 (V2) 更新是什么? Review Table: Gridworld MDP and Figure: Transition Function. The gridworld MDP operates like the one discussed in lecture. The states are grid squares, identified by their column (A, B, or C) and row (1 or 2) values, as presented in the table. The agent always starts in state (A,1), marked with the letter S. There are two terminal goal states: (B,1) with reward -5, and (B,2) with reward +5. Rewards are 0 in non-terminal states. (The reward for a state is received before the agent applies the next action.) The transition function in Figure: Transition Function is such that the intended agent movement (Up, Down, Left, or Right) happens with probability 0.8. The probability that the agent ends up in one of the states perpendicular to the intended direction is 0.1 each. If a collision with a wall happens, the agent stays in the same state, and the drift probability is added to the probability of remaining in the same state. Assume that V 1 (A,1) = 0, V 1 C,1) = 0, V 1 (C,2) = 4, V 1 (A,2) = 4, V 1 (B,1) = -5, and V 1 (B,2) = +5. Given this information, what is the second round of value iteration (V 2 ) update for state (A,1) with a discount of 0.5?

Which of the following algorithms is used to find a policy?

In a consumer society, many adults channel creativity into buying things

Economic stress and unpredictable times have resulted in a booming industry for self-help products

更多留学生实用工具

希望你的学习变得更简单

加入我们,立即解锁 海量真题 与 独家解析,让复习快人一步!