Questions

COMP90054_2025_SM2 Supplementary or Special Exam: AI Planning for Autonomy (COMP90054_2025_SM2)- Requires Respondus LockDown Browser

Single choice

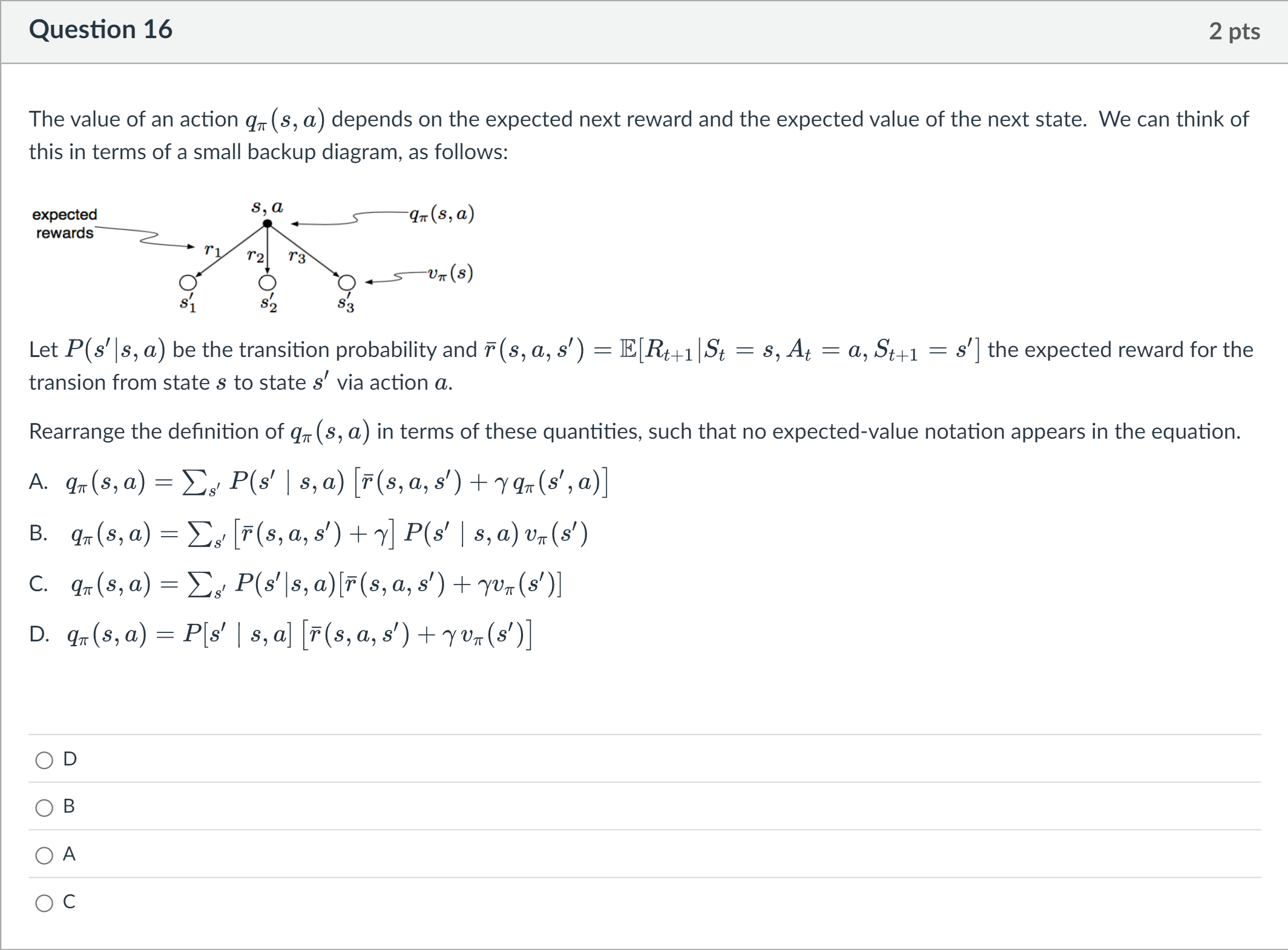

The value of an action 𝑞 𝜋 ( 𝑠 , 𝑎 ) depends on the expected next reward and the expected value of the next state. We can think of this in terms of a small backup diagram, as follows: Let 𝑃 ( 𝑠 ′ | 𝑠 , 𝑎 ) be the transition probability and 𝑟 ¯ ( 𝑠 , 𝑎 , 𝑠 ′ ) = 𝐸 [ 𝑅 𝑡 + 1 | 𝑆 𝑡 = 𝑠 , 𝐴 𝑡 = 𝑎 , 𝑆 𝑡 + 1 = 𝑠 ′ ] the expected reward for the transion from state 𝑠 to state 𝑠 ′ via action 𝑎 . Rearrange the definition of 𝑞 𝜋 ( 𝑠 , 𝑎 ) in terms of these quantities, such that no expected-value notation appears in the equation. A. 𝑞 𝜋 ( 𝑠 , 𝑎 ) = ∑ 𝑠 ′ 𝑃 ( 𝑠 ′ ∣ 𝑠 , 𝑎 ) [ 𝑟 ¯ ( 𝑠 , 𝑎 , 𝑠 ′ ) + 𝛾 𝑞 𝜋 ( 𝑠 ′ , 𝑎 ) ] B. 𝑞 𝜋 ( 𝑠 , 𝑎 ) = ∑ 𝑠 ′ [ 𝑟 ¯ ( 𝑠 , 𝑎 , 𝑠 ′ ) + 𝛾 ] 𝑃 ( 𝑠 ′ ∣ 𝑠 , 𝑎 ) 𝑣 𝜋 ( 𝑠 ′ ) C. 𝑞 𝜋 ( 𝑠 , 𝑎 ) = ∑ 𝑠 ′ 𝑃 ( 𝑠 ′ | 𝑠 , 𝑎 ) [ 𝑟 ¯ ( 𝑠 , 𝑎 , 𝑠 ′ ) + 𝛾 𝑣 𝜋 ( 𝑠 ′ ) ] D. 𝑞 𝜋 ( 𝑠 , 𝑎 ) = 𝑃 [ 𝑠 ′ ∣ 𝑠 , 𝑎 ] [ 𝑟 ¯ ( 𝑠 , 𝑎 , 𝑠 ′ ) + 𝛾 𝑣 𝜋 ( 𝑠 ′ ) ]

Options

A.D

B.B

C.A

D.C

View Explanation

Verified Answer

Please login to view

Step-by-Step Analysis

We begin by identifying the underlyingBellman equation for action values under policy π. The quantity qπ(s, a) is the expected return when taking action a in state s and thereafter following policy π, which can be written as an expectation over next states s' of the immediate reward plus the discounted future value from the next state when continuing with the same action a (since the action chosen at state s is fixed to a). Now we evaluate each option.

......Login to view full explanationLog in for full answers

We've collected over 50,000 authentic exam questions and detailed explanations from around the globe. Log in now and get instant access to the answers!

Similar Questions

Shown is the Q Actor-Critic (QAC) function, with line numbers. 1. Initialise 𝑠 , 𝜃 2. Sample 𝑎 ∼ 𝜋 𝜃 3. for each step do 4. Sample reward 𝑟 = 𝑅 𝑠 𝑎 ; sample transition 𝑠 ′ ∼ 𝑃 𝑠 , ⋅ 𝑎 5. Sample action 𝑎 ′ ∼ 𝜋 𝜃 ( 𝑠 ′ , 𝑎 ′ ) 6. 𝛿 = 𝑟 + 𝛾 𝑄 𝑤 ( 𝑠 ′ , 𝑎 ′ ) − 𝑄 𝑤 ( 𝑠 , 𝑎 ) 7. 𝜃 ← 𝜃 + 𝛼 ∇ 𝜃 𝑙 𝑜 𝑔 𝜋 𝜃 ( 𝑠 , 𝑎 ) 𝑄 𝑤 ( 𝑠 , 𝑎 ) 8. 𝑤 ← 𝑤 + 𝛽 𝛿 𝜙 ( 𝑠 , 𝑎 ) 9. 𝑎 ← 𝑎 ′ , 𝑠 ← 𝑠 ′ 10. end for Which of the following statements is true (can be more than one)?

Which statement best describes the difference between SARSA and Q-learning?

Which of the following best describes a key difference between Monte Carlo and Temporal-Difference (TD) learning?

Select all of the following methods that use bootstrapping to estimate values

More Practical Tools for Students Powered by AI Study Helper

Making Your Study Simpler

Join us and instantly unlock extensive past papers & exclusive solutions to get a head start on your studies!