
Single choice

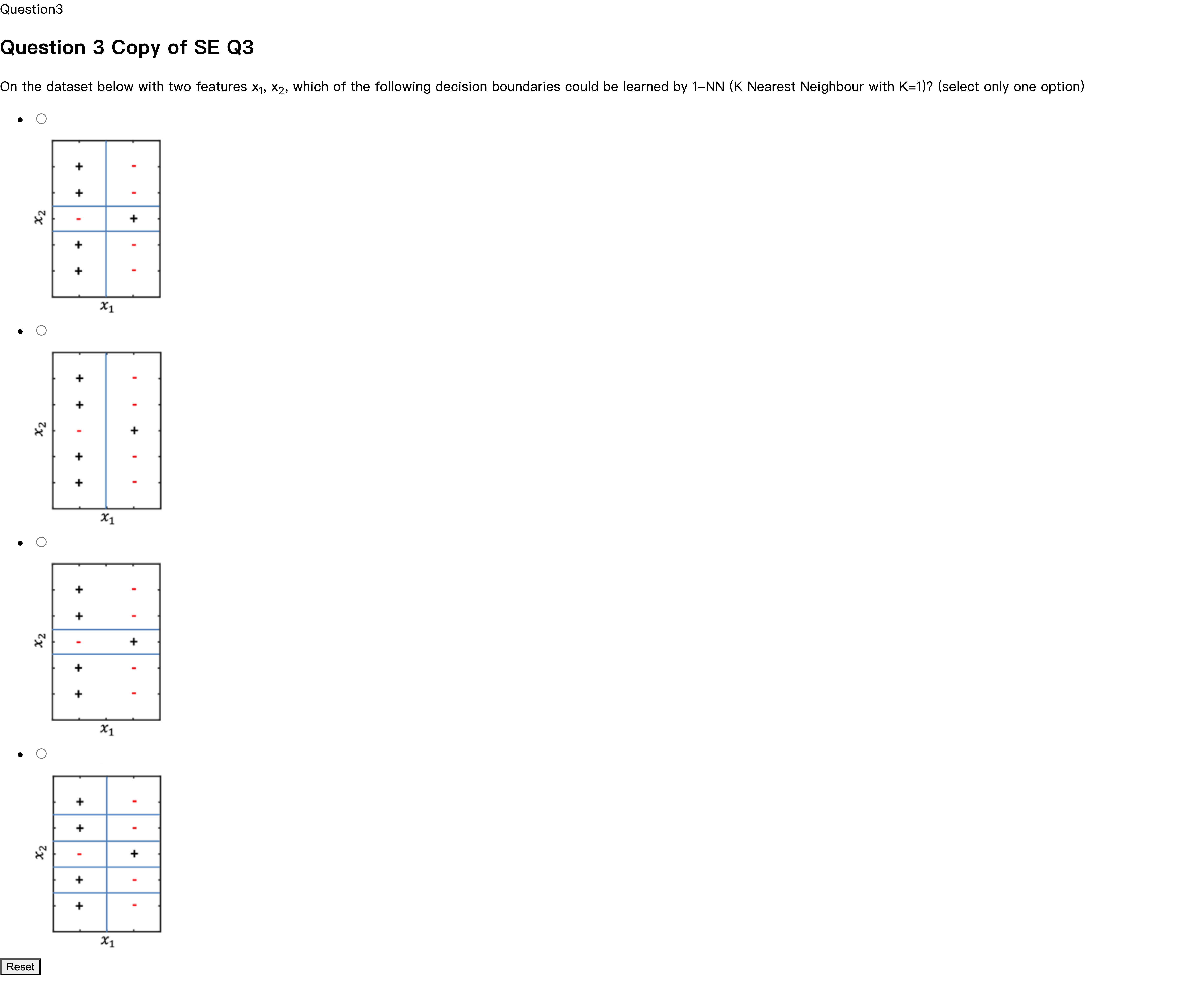

Question 3: On the dataset below with two features, x1 and x2, which of the following decision boundaries could be learned by 1-NN (K-Nearest Neighbour with K = 1)? (Select only one option.)

Options

A.

B.

C.

D.


Step-by-Step Analysis

The answer options are images that are not reproduced here, so this analysis cannot say which option is correct. It can, however, explain how 1-NN (K = 1) forms decision boundaries on a two-feature (x1, x2) dataset, which lets you evaluate each option.

General principle of 1-NN with K = 1:

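- Each training point defines its own Voronoi cell: the region of the plane that is closer to that point than to any other training point. A new point receives the label of its nearest neighbour in the training set, i.e. the label of the cell it falls in.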

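- Consequently, the decision boundary between two classes is the set of points that are equidistant from their two nearest training samples when those samples have opposite labels. Under Euclidean distance, each piece of this boundary lies on the perpendicular bisector of such a pair, so the boundary is piecewise linear.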
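To make the Voronoi picture concrete, here is a minimal 1-NN sketch in Python on a hypothetical four-point toy dataset (not the dataset in the question image). It labels every point of a coarse grid by its nearest training point, so the border between the `o` and `x` characters in the printed map approximates the decision boundary. `TRAIN`, `nearest_label`, and `region_map` are illustrative names, and breaking ties in favour of the earlier training point is an arbitrary choice.

```python
import math

# Hypothetical toy training set of ((x1, x2), label) pairs; NOT the dataset from the question.
TRAIN = [
    ((1.0, 1.0), "o"),
    ((2.0, 3.0), "o"),
    ((4.0, 1.0), "x"),
    ((4.0, 4.0), "x"),
]

def nearest_label(point, train=TRAIN):
    """1-NN: return the label of the closest training point (Euclidean distance).
    min() keeps the first minimum, so ties go to the earlier training point."""
    _, label = min(train, key=lambda item: math.dist(point, item[0]))
    return label

def region_map(train=TRAIN, width=10, height=10, step=0.5):
    """Label every grid point by 1-NN; the o/x border traces the decision boundary."""
    rows = []
    for j in range(height, -1, -1):  # top row (largest x2) first
        rows.append("".join(nearest_label((i * step, j * step), train)
                            for i in range(width + 1)))
    return "\n".join(rows)

print(region_map())
```

Note that every training point is classified with its own label, since its nearest neighbour is itself; a candidate boundary that leaves a training point on the wrong side cannot come from 1-NN.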


Similar Questions

A mobile phone carrier wants to predict whether a new customer is likely to switch to a competitor in the next three months. They plot customers based on usage patterns and support call frequency. The visualization below shows two groups: customers who stayed (green) and customers who left (orange). The black question mark represents a new customer whose status is unknown. Which method should the team use if they want to classify the new customer based on the behavior of the closest existing customers in the plot?

Assume you work for a large real estate company and are asked to build a model that predicts listing prices for houses being put up for sale. You have an existing dataset of 5 houses and their prices, and you want to predict the listing price of house Z. You decide to train a k-NN model. The normalized data is below.

| HOUSE | Number of bedrooms | Number of bathrooms | Patio | PRICE |
|---|---|---|---|---|
| A | 1 | 1 | 1 | $650,000 |
| B | 1 | 1 | 0 | $610,000 |
| C | 0.5 | 0 | 1 | $620,000 |
| D | 0 | 0.5 | 0 | $550,000 |
| E | 0 | 0 | 0 | $540,000 |
| Z | 0.5 | 1 | 1 | ??? |

You also have the Euclidean distances between the existing houses and the new house, Z:

| Pair | Distance |
|---|---|
| A-Z | 0.5 |
| B-Z | 1.12 |
| C-Z | 1 |
| D-Z | 1.52 |
| E-Z | 1.23 |

Assume you run a k-NN model with k = 4. What price would be predicted for house Z?
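A minimal sketch of k-NN regression for this question, in Python. It assumes an unweighted mean of the k nearest prices (the question does not say whether the average is distance-weighted). Recomputing the Euclidean distances from the normalized features gives D-Z ≈ 1.22 and E-Z = 1.50, which differ from the listed 1.52 and 1.23, so the sketch runs both the recomputed and the listed distances. `HOUSES`, `LISTED`, and `knn_predict` are illustrative names.

```python
import math

# Normalized features (bedrooms, bathrooms, patio) and prices, copied from the question.
HOUSES = {
    "A": ((1.0, 1.0, 1.0), 650_000),
    "B": ((1.0, 1.0, 0.0), 610_000),
    "C": ((0.5, 0.0, 1.0), 620_000),
    "D": ((0.0, 0.5, 0.0), 550_000),
    "E": ((0.0, 0.0, 0.0), 540_000),
}
Z = (0.5, 1.0, 1.0)

# Distances to Z exactly as listed in the question.
LISTED = {"A": 0.5, "B": 1.12, "C": 1.0, "D": 1.52, "E": 1.23}

def knn_predict(distances, k):
    """Unweighted k-NN regression: average the prices of the k houses with the smallest distances."""
    nearest = sorted(distances, key=distances.get)[:k]
    return nearest, sum(HOUSES[name][1] for name in nearest) / k

# Recompute Euclidean distances from the normalized features.
computed = {name: math.dist(Z, feats) for name, (feats, _) in HOUSES.items()}
for name, d in computed.items():
    print(f"{name}-Z: {d:.2f}")  # one line each: A-Z: 0.50, B-Z: 1.12, C-Z: 1.00, D-Z: 1.22, E-Z: 1.50

print(knn_predict(computed, k=4))  # (['A', 'C', 'B', 'D'], 607500.0)
print(knn_predict(LISTED, k=4))    # (['A', 'C', 'B', 'E'], 605000.0)
```

The two runs agree on the three closest houses (A, C, B) and differ only in which house is fourth, which is why the predicted price depends on which set of distances is used.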

Question 7: Which of the following statements is/are INCORRECT? (You can choose more than one.)

- Choosing different distance measures affects the decision boundary in both 1-NN and 5-NN.
- 5-NN is more robust to outliers than 1-NN.
- 5-NN has lower bias than 1-NN.
- 5-NN handles noise better than 1-NN.

Maximum marks: 2
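Two of these statements can be checked on small examples. The Python sketch below uses hypothetical toy points (not from any dataset in this page): the first part shows that switching from Euclidean to Manhattan distance can change even a 1-NN prediction, and the second shows a single mislabelled point flipping the 1-NN prediction while being outvoted under 5-NN. `knn_classify` and `manhattan` are illustrative helpers, and majority voting with `Counter` is one simple way to combine the k labels.

```python
import math
from collections import Counter

def manhattan(p, q):
    """L1 (Manhattan) distance between two points of equal dimension."""
    return sum(abs(a - b) for a, b in zip(p, q))

def knn_classify(query, train, k, dist=math.dist):
    """Majority label among the k training points closest to `query` under `dist`."""
    nearest = sorted(train, key=lambda item: dist(query, item[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# 1) Changing the distance measure can change even the 1-NN label.
pair = [((3.0, 0.0), "a"), ((2.0, 2.0), "b")]
print(knn_classify((0.0, 0.0), pair, k=1))                  # Euclidean: 2.83 < 3, so "b"
print(knn_classify((0.0, 0.0), pair, k=1, dist=manhattan))  # Manhattan: 3 < 4, so "a"

# 2) One mislabelled point (the "b" at (0.1, 0.1)) decides 1-NN but is outvoted 4-1 under 5-NN.
cluster = [((0.0, 1.0), "a"), ((1.0, 0.0), "a"), ((0.0, -1.0), "a"),
           ((-1.0, 0.0), "a"), ((1.0, 1.0), "a"), ((0.1, 0.1), "b")]
print(knn_classify((0.2, 0.2), cluster, k=1))  # "b"
print(knn_classify((0.2, 0.2), cluster, k=5))  # "a"
```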

kNN is an example of ______________________ learning. Select all that apply for full marks.
